Note: This blog post is provided for informational purposes only. It is not intended to be a substitute for legal advice. As such, we recommend that you consult a lawyer before acting on any matter discussed within this post.

This year we're extremely excited to make Artificial Intelligence (AI) a central part of our annual Codegeist Unleashed hackathon. AI is driving a seismic transformation that has the potential to dramatically accelerate what teams can achieve. It also creates a huge opportunity for developers and ecosystem partners to build new functionality that we only dreamed of a couple of years ago.

The importance of using AI responsibly

AI is a powerful tool, and it has the ability to shape our world for good. However, like many powerful tools, its usage shouldn't be taken lightly. AI isn't perfect, and when we build apps that use AI, we need to put safety and usage guardrails in place to prevent adverse effects on end users.

At Atlassian, we live our mission and values in everything we do. We use our recently published Responsible Technology Principles to guide our thinking when building, deploying and using new technologies like AI.

These principles also provide a great framing for how you can use AI responsibly in your Codegeist and Marketplace apps. Thinking about using tech responsibly won't just help you build a better app; it could also help you win one of five prizes for the responsible use of AI! Let's dive in.

Transparency

No BS

Openness is foundational to Atlassian: one of our core values is Open Company, No Bullshit. It's important that anyone who wants to make the most of new technologies is equipped with the right information to do so.

We believe that the disclosure of AI usage is a critical part of using it responsibly. Your app and its documentation should be very clear about:

- The models or third-party AI providers you're using.

- The customer data that is being processed by AI and for what purpose.

- The situations where users will interact with AI in your app.
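One way to keep these disclosures consistent between your app and its documentation is to record them once as data and generate the documentation text from it. The sketch below assumes a hypothetical `AIDisclosure` class; the provider name, data description, and UI location in the usage are placeholders, not recommendations.

```python
from dataclasses import dataclass, field


@dataclass
class AIDisclosure:
    """Hypothetical single record of what an app discloses about its AI use."""
    providers: list[str]              # models or third-party AI providers in use
    data_processed: dict[str, str]    # customer data -> purpose it is processed for
    interaction_points: list[str] = field(default_factory=list)  # where users meet AI

    def to_markdown(self) -> str:
        """Render the disclosure as a section for the app's documentation."""
        lines = ["## How this app uses AI", "", "Models and providers:"]
        lines += [f"- {name}" for name in self.providers]
        lines += ["", "Customer data processed by AI, and why:"]
        lines += [f"- {data}: {purpose}" for data, purpose in self.data_processed.items()]
        lines += ["", "Where you interact with AI:"]
        lines += [f"- {place}" for place in self.interaction_points]
        return "\n".join(lines)


# Placeholder values for illustration only.
disclosure = AIDisclosure(
    providers=["Example LLM provider"],
    data_processed={"Confluence page text": "drafting a project poster"},
    interaction_points=["The 'Generate poster' action on a Confluence page"],
)
print(disclosure.to_markdown())
```

Keeping the disclosure in one place means the in-app notice and the documentation can't silently drift apart when you change providers or start processing new data.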

Your user experience should also make it clear when users are interacting with AI. If users know when they're interacting with AI, and what to look out for, they can be more vigilant in spotting issues like biased output or incorrect information. This is particularly true when your app closely mimics human behaviour (e.g. chatbots) or produces output that looks human-generated (e.g. text from LLMs).

Setting clear expectations about what your app can and can’t do well is also an important part of demonstrating transparency.

Example: Imagine you're building an app that pre-populates project posters for project kick-offs based on pages in a Confluence site. During testing, you realise that your app does a great job of generating text that describes the problem space, but it frequently invents or misstates statistics and KPIs. You might include a short note reminding customers to "double-check any numbers!" so they understand the limitations of the tool.
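A minimal sketch of how an app might label AI-generated text and attach the "double-check any numbers!" caveat only when the output contains figures. The label wording, the `present_ai_text` function, and the digit-based heuristic are illustrative assumptions, not a recommended standard.

```python
import re

# Illustrative wording; adapt it to your app's voice.
AI_LABEL = "Generated by AI. Review before sharing."
NUMBER_CAVEAT = "Double-check any numbers!"

# Matches any digit; used as a rough proxy for statistics or KPIs in the text.
_HAS_DIGIT = re.compile(r"\d")


def present_ai_text(generated: str) -> str:
    """Label model output as AI-generated and add a caveat when it contains figures."""
    parts = [AI_LABEL, "", generated.strip()]
    if _HAS_DIGIT.search(generated):
        parts += ["", NUMBER_CAVEAT]
    return "\n".join(parts)


print(present_ai_text("Goal: cut onboarding time by 30% this quarter."))
```

Showing the caveat only when it is relevant keeps it from becoming noise that users learn to ignore.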

Trust

Build for trust

Trust is at the heart of our work and our products: if someone doesn't trust our company, they won't use our products or want to work here. This extends to the technologies that underpin and power our products and our work.

Customers place a significant amount of trust in you when they install your application. They need to know that their data is in safe hands. When it comes to AI, a particular concern is whether their data will be used to train or improve AI models that benefit (or even leak data to) other customers.

We strongly encourage you to only use providers that don't use customer data for training their models. Read and understand the privacy and data retention policies of your chosen provider, and make those policies available to your customers so that they can understand them for themselves.

When it comes to personalising the output of AI models, we'd encourage you to explore in-context learning (supplying instructions and examples in the prompt itself) before training models with customer data (fine-tuning). So far, in our experience with Atlassian Intelligence, we have found that well-crafted prompts get you the results you want without the expense or delay of fine-tuning.

There are also some general data privacy best practices that are especially important when it comes to AI:

- Minimise the amount of data you're sending to third-party AI providers.

- Don't send Personally Identifiable Information (PII) to third-party AI providers.

- Don't use customer data when developing and testing your app.

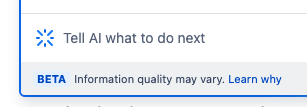
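The data-minimisation practices above can start with a redaction and truncation pass before any text leaves your app. This is a deliberately crude sketch: the two regular expressions catch only obvious email addresses and phone-number-like digit runs (and will also redact things like dates), and the 2,000-character cap is an arbitrary assumption. Treat it as a starting point, not a complete PII solution.

```python
import re

# Crude, illustrative patterns: they miss many forms of PII and can over-match
# (e.g. long dates or IDs may be redacted as phone numbers).
_EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
_PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def minimise_for_llm(text: str, max_chars: int = 2000) -> str:
    """Redact obvious PII, then cap how much text is sent to a third-party provider."""
    redacted = _EMAIL.sub("[EMAIL]", text)
    redacted = _PHONE.sub("[PHONE]", redacted)
    return redacted[:max_chars]


ticket = "Contact jane.doe@example.com or +61 2 9999 0000 about the outage."
print(minimise_for_llm(ticket))
# Contact [EMAIL] or [PHONE] about the outage.
```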

You should also be aware of the emerging security threats introduced by AI. The OWASP Foundation has recently published the OWASP Top 10 for Large Language Model Applications, which is a great starting point for learning about these threats and how you can prevent attacks.

Example: Imagine you're building an app that automatically labels Jira Service Management (JSM) tickets based on customer-defined categories. Your first thought might be to export all the tickets from the site, along with their labels, and use them to fine-tune an AI model for each customer. A better option is probably to use the JSM API to retrieve a small sample of tickets from each category, then use those as examples in your prompt each time you ask the LLM to categorise a new ticket.
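Following the in-context approach from the example, a classification prompt can be assembled from a handful of already-labelled tickets per category instead of fine-tuning on a full export. The sketch assumes the sample tickets have already been fetched (the JSM API call is not shown); `build_few_shot_prompt` and its prompt wording are hypothetical.

```python
import random
from typing import Optional


def build_few_shot_prompt(
    examples_by_category: dict[str, list[str]],
    new_ticket: str,
    per_category: int = 3,
    seed: Optional[int] = None,
) -> str:
    """Build an in-context (few-shot) classification prompt; no model is fine-tuned.

    examples_by_category maps each customer-defined category to ticket summaries
    already retrieved from the site.
    """
    rng = random.Random(seed)
    categories = sorted(examples_by_category)
    lines = [
        "Classify the ticket into exactly one of these categories: "
        + ", ".join(categories) + ".",
        "",
    ]
    for category in categories:
        tickets = examples_by_category[category]
        # Only a small sample per category is sent, which also minimises data shared.
        for ticket in rng.sample(tickets, min(per_category, len(tickets))):
            lines += [f"Ticket: {ticket}", f"Category: {category}", ""]
    lines += [f"Ticket: {new_ticket}", "Category:"]
    return "\n".join(lines)


examples = {
    "Billing": ["Invoice shows the wrong amount", "Refund not received"],
    "Access": ["Cannot log in to the portal", "Need admin rights for a project"],
}
print(build_few_shot_prompt(examples, "Charged twice this month", per_category=1, seed=0))
```

Because the examples are chosen per request, changes to a customer's categories take effect immediately, with no retraining step.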

Accountability

Accountability, as a team

At Atlassian, we know a thing or two about collaboration and teamwork. Our products are powered by our own people, by the foundational technologies that we use to deliver them, and, of course, by how our customers' teams choose to use them.

As an app developer, you play an important role in the broader ecosystem of stakeholders who share responsibility and accountability for using AI responsibly. You can't control everything, but there are things you can do to minimise the likelihood of bad outcomes.

Strike the right balance of human involvement, oversight and control when designing your user experience. Keeping a "human in the loop" is one way to control for potentially problematic uses and consequences.

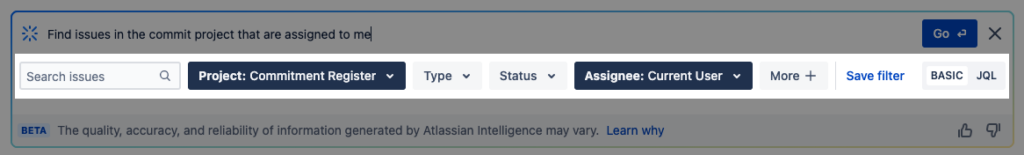
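One simple way to keep a human in the loop is to have the AI produce proposals rather than changes, and apply only the ones a person has approved. The `ProposedChange` class, the `apply_approved` helper, and the issue keys below are hypothetical; `apply` stands in for whatever actually performs the change in your app.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ProposedChange:
    """An AI suggestion that stays a proposal until a person approves it."""
    target: str                # e.g. a Jira issue key (illustrative)
    description: str           # what the AI wants to do, shown to the reviewer
    approved: bool = False     # set by a human reviewer, never by the AI


def apply_approved(
    changes: list[ProposedChange],
    apply: Callable[[ProposedChange], None],
) -> list[ProposedChange]:
    """Apply only human-approved changes and return those still awaiting review."""
    pending = []
    for change in changes:
        if change.approved:
            apply(change)
        else:
            pending.append(change)
    return pending


applied: list[ProposedChange] = []
proposals = [
    ProposedChange("PROJ-1", "Add label 'billing'", approved=True),
    ProposedChange("PROJ-2", "Close as duplicate of PROJ-1"),
]
still_pending = apply_approved(proposals, applied.append)
print([c.target for c in applied], [c.target for c in still_pending])
```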

You can also gather and act on feedback from your app's users. A "thumbs up" or "thumbs down" rating is an easy way to do this.
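A sketch of recording thumbs-up/thumbs-down feedback and surfacing the responses that need a closer look. The `Feedback` record and `summarise` function are hypothetical, and a real app would persist this data rather than keep it in a list.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Feedback:
    response_id: str                # identifies the AI response being rated
    thumbs_up: bool
    comment: Optional[str] = None   # optional reason, e.g. "invented a KPI"


def summarise(feedback: list[Feedback]) -> dict[str, object]:
    """Count ratings and list the responses that received at least one thumbs down."""
    counts = Counter("up" if f.thumbs_up else "down" for f in feedback)
    needs_review = sorted({f.response_id for f in feedback if not f.thumbs_up})
    return {"up": counts["up"], "down": counts["down"], "needs_review": needs_review}


log = [
    Feedback("r1", True),
    Feedback("r2", False, "Invented a KPI"),
    Feedback("r2", False),
    Feedback("r3", True),
]
print(summarise(log))
```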

If your implementation of AI is a bit more automated and behind the scenes, consider leaving an audit trail of actions taken by AI so humans can review them after the fact. This could be as simple as leaving comments on the Jira issues or Confluence pages that were modified.
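For the Jira case, an audit comment can be posted with the Jira Cloud REST API's add-comment endpoint (`POST /rest/api/3/issue/{issueIdOrKey}/comment`), whose body is written in Atlassian Document Format (ADF). The sketch below only builds the request path and JSON payload; authentication and sending the request (in a Forge app, typically through `requestJira`) are left out, and the `audit_comment` helper and comment wording are assumptions.

```python
import json
from datetime import datetime, timezone


def audit_comment(issue_key: str, action: str, model: str) -> tuple[str, str]:
    """Return (path, JSON body) for a Jira Cloud comment recording an AI action."""
    timestamp = datetime.now(timezone.utc).strftime("%Y-%m-%d %H:%M UTC")
    text = f"[AI action] {action} (model: {model}, at {timestamp}). Please review."
    body = {
        # Minimal ADF document: one paragraph containing one text node.
        "body": {
            "type": "doc",
            "version": 1,
            "content": [
                {"type": "paragraph", "content": [{"type": "text", "text": text}]}
            ],
        }
    }
    return f"/rest/api/3/issue/{issue_key}/comment", json.dumps(body)


path, payload = audit_comment("PROJ-42", "Added label 'billing'", "example-model")
print(path)
```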

Example: Imagine that you are developing a chatbot that uses an OpenAI model under the hood. Although OpenAI has controls in place to minimise the amount of toxic or offensive content that can be generated, these controls are not foolproof. It's important to establish a reporting process for any results that slip past these controls, and to use that feedback to improve future versions of your app.

Human-centricity

Human-(em)powered

At Atlassian, we want our company and our technologies to be open, inclusive, fair and just: to reflect the human-centric values and fundamental human rights that we all share. Our journey to build responsibly reflects this aim.

Think about how all users experience your app and all the people that will be impacted. AI technology can be biased and unfair, so think about how you might correct or control that. Working with diverse and inclusive teams can help you uncover any blind spots.

When working with vendors and technology partners, make sure that they demonstrate values that align with Atlassian's and your own. How would using them reflect on your own reputation?

Example: Imagine you're building an app that uses AI to help managers identify employees who deserve kudos or recognition based on their Jira or Bitbucket history. Using metrics such as lines of code (LOC), commits, and story points to measure productivity is a controversial topic in engineering teams. Could this lead to negative behaviour? Would it discourage junior team members, new starters, or part-timers? Perhaps you need to layer in more data or human involvement to mitigate the potential impact.

Empowerment

Unleashing potential, for everyone

We know that behind every great human achievement, there is a team. We also believe that new technologies can help empower those teams to achieve even more. If we use these technologies (like AI) responsibly and intentionally, then we can supercharge this vision and contribute to better outcomes across our communities.

For your app to be truly valuable to its users (including their customers and colleagues) you need to think through the ways in which it could be used and misused. It's your responsibility to anticipate and try to control for bad outcomes and to drive towards good outcomes.

It's also important to go beyond the immediate use case to consider all potential uses and consequences. This will help to maximise value and minimise risks (including reputational and legal risks).

To do this, you could try asking yourself: How would a supervillain use your app? What are the three worst-case headlines that might result from someone using your app? How can you protect against these things?

Example: Imagine you're building a generative AI tool that creates graphics for Confluence blogs to make them more fun and engaging. Think through how it might be used to create toxic, unlawful or just plain unwanted content.

AI content moderation probably isn't something you want to build yourself, but the models and provider you choose could make a big difference. Also see if there are additional tools you can take advantage of (e.g. OpenAI's moderation endpoint) to make AI interactions safer.
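Whichever moderation service you choose, it helps to check both the user's request and the generated output before anything is shown. The sketch keeps the checker pluggable; `moderated_generate`, `FALLBACK`, and the stub functions are hypothetical. It uses text in and text out, so for an image generator the second check would need a moderation service that accepts images.

```python
from typing import Callable

FALLBACK = "Sorry, that request can't be completed. Please try rephrasing it."


def moderated_generate(
    prompt: str,
    generate: Callable[[str], str],
    is_flagged: Callable[[str], bool],
) -> str:
    """Run moderation on the user's prompt and on the model's output before showing it."""
    if is_flagged(prompt):
        return FALLBACK          # never send a flagged request to the model
    output = generate(prompt)
    if is_flagged(output):
        return FALLBACK          # never show flagged output to the user
    return output


# Stubs for illustration; swap in a real model call and moderation service.
def fake_generate(prompt: str) -> str:
    return f"Caption for: {prompt}"


def fake_is_flagged(text: str) -> bool:
    return "forbidden" in text.lower()


print(moderated_generate("a cheerful team offsite", fake_generate, fake_is_flagged))
```

With the OpenAI Python SDK (v1 or later), a real checker could be `lambda text: client.moderations.create(input=text).results[0].flagged`, where `client = OpenAI()`. Passing the checker in as a parameter also makes it easy to switch providers or test with stubs.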

Now get building!

All this talk of responsible AI may seem daunting, but the best way to understand it will be to experiment and try things out. Codegeist is a hackathon after all, and we don't expect things to be perfect.

If you start building your app with these principles in mind, you have the best possible chance to make a great Codegeist submission. And if you plan to list your app in the Marketplace, you'll be on the path to do the right thing for your future customers.

Do you have a clever idea for how AI could be integrated into Atlassian's tools and products? Then sign up for Codegeist Unleashed and get your submission in by the 24th of October. You could win a share of $172,500 in prizes and even a trip to Amsterdam. Good luck!