The problem space – decision making

Making decisions with the right combination of robustness and speed is a challenge, but improving the process can have a dramatic impact. Within Atlassian, when we need to make a decision, we create a DACI page in Confluence. DACI stands for Driver, Approver, Contributors, Informed, and the framework is detailed further in the Atlassian Team Playbook. We love DACIs so much that we even created a DACI Confluence app that simplifies the creation of DACI pages.

If you're unfamiliar with the DACI framework, you may like to read up on it, but for the purpose of this post you only need to think of a DACI as a Confluence page whose structure encourages adherence to a decision-making process. This post also refers to the DACI driver, the person responsible for ensuring the DACI process is followed.

Decision making helper app

Since all DACI pages are based on a template provided by the DACI Confluence app and a process outlined in the DACI play, I figured I would be able to improve our decision making by creating an app that helps us follow best practices.

Early in development, I realized that the most difficult aspect of the app would be parsing pages. Consequently, I needed a quick development loop whilst implementing the parsing logic, so I encapsulated that logic in a utility that could easily be unit tested.
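To illustrate why keeping the parser pure makes unit testing easy, here is a minimal sketch of such a utility. It is not the open-source app's code: `parseDaciFields` is a hypothetical name, and it assumes, purely for illustration, that the page's storage-format XHTML lays out fields as table rows of the form `<tr><th>Label</th><td>Value</td></tr>`. The real DACI template markup may differ.

```javascript
// Hypothetical sketch: extract "label -> value" pairs from table rows in a
// page's storage-format XHTML. Assumes rows look like
// <tr><th>Label</th><td>Value</td></tr>; the real DACI template may differ.
function stripTags(html) {
  // Remove nested markup and collapse runs of whitespace.
  return html.replace(/<[^>]*>/g, " ").replace(/\s+/g, " ").trim();
}

function parseDaciFields(storageXhtml) {
  const fields = {};
  const rowPattern =
    /<tr[^>]*>\s*<th[^>]*>([\s\S]*?)<\/th>\s*<td[^>]*>([\s\S]*?)<\/td>\s*<\/tr>/g;
  for (const match of storageXhtml.matchAll(rowPattern)) {
    const label = stripTags(match[1]).toLowerCase();
    fields[label] = stripTags(match[2]);
  }
  return fields;
}

// Unit-test style usage: no Confluence instance is needed, only a string.
// parseDaciFields("<tr><th>Driver</th><td><p>Jane</p></td></tr>").driver === "Jane"
```

Because the function takes a string and returns a plain object, a test runner can exercise it in milliseconds, leaving tunneling for checking behavior against real pages.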

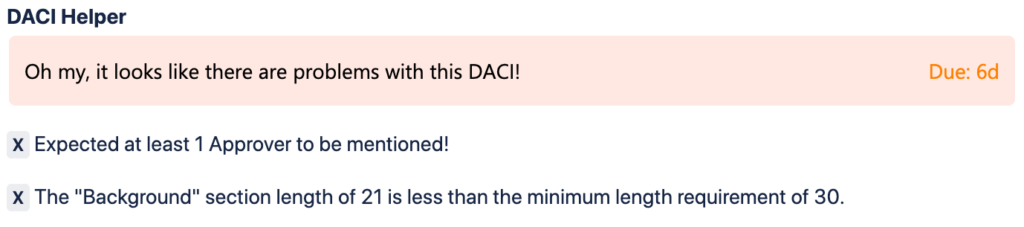

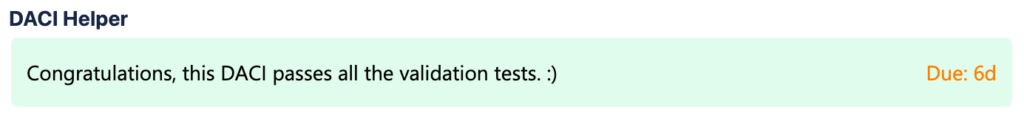

Unit testing combined with the Forge tunneling feature gave me an efficient development flow, allowing me to implement the page parsing and DACI best-practice logic rather quickly. I then added an editor macro so the Forge app could surface its results in the DACI pages it is inserted into. The editor macro displays best practice messages such as:

- Sufficiency of supporting material.

- Suggestions about the identification of a driver, approver(s), etc.

- Suggestions about identifying a due date, and warnings when the due date is approaching or has passed.

- Documentation of outcomes.
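Checks like these can be expressed as a pure function over an already-parsed page. The sketch below is an illustration only: the input shape (`driver`, `approvers`, `dueDate`, `status`, `outcome`, `supportingLinks`), the message wording and the 3-day "approaching" threshold are all assumptions, not the DACI Helper app's actual rules.

```javascript
// Hypothetical parsed DACI shape (illustrative field names only):
// { driver, approvers: [], dueDate: "YYYY-MM-DD" | null, status, outcome, supportingLinks }
const DAY_MS = 24 * 60 * 60 * 1000;
const APPROACHING_DAYS = 3; // assumed threshold for "due date approaching"

function checkBestPractices(daci, now = new Date()) {
  const messages = [];
  if (!daci.supportingLinks) {
    messages.push("Consider adding supporting material.");
  }
  if (!daci.driver) {
    messages.push("Identify a driver.");
  }
  if (!daci.approvers || daci.approvers.length === 0) {
    messages.push("Identify at least one approver.");
  }
  if (!daci.dueDate) {
    messages.push("Set a due date.");
  } else if (daci.status !== "DECIDED") {
    // Only undecided DACIs can be overdue or approaching their due date.
    const daysLeft = (Date.parse(daci.dueDate) - now.getTime()) / DAY_MS;
    if (daysLeft < 0) {
      messages.push("This decision is overdue.");
    } else if (daysLeft <= APPROACHING_DAYS) {
      messages.push("The due date is approaching.");
    }
  }
  if (daci.status === "DECIDED" && !daci.outcome) {
    messages.push("Document the outcome of the decision.");
  }
  return messages;
}
```

Passing `now` as a parameter keeps the date-based checks deterministic in unit tests.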

The macro also renders a banner summarising the status. At the time I developed the macro, Forge provided a fairly limited set of components, so I implemented the banner by dynamically creating an SVG and passing it as the src attribute of the Image component. Using an SVG allowed me to color the banner red or green depending on the number of issues found. This kind of workaround will not be necessary once Forge supports more composability options and possibly higher-order components such as banners and cards. In fact, whilst I was writing this blog, Forge released several UI components that would have helped here.

When creating the SVG representing the banner, I ran into a problem whereby the SVG would not display. It turned out that this was because the SVG used hexadecimal color codes but was not URL encoded. The URL encoding is necessary because the SVG is passed in the Image src parameter as a data URI, where an unencoded # in a color code such as #FFFFFF is interpreted as the start of a URL fragment, truncating the SVG. The following snippet shows how to create the Forge Image component with an SVG:

```jsx
import ForgeUI, { Image } from '@forge/ui';

// svg holds the banner markup as a string. encodeURIComponent escapes
// characters such as '#' that would otherwise break the data URI.
<Image
  src={`data:image/svg+xml;utf8,${encodeURIComponent(svg)}`}
  alt="..."
/>
```

Speaking of colors, I used the Atlassian design color palette to make the banner conform to the Confluence styles as closely as possible. Other aspects of the SVG can also look out of place if left at their defaults, so I had to explicitly set a few properties, such as a text size of 14px and a text stroke-width of 0px. Suffice it to say, I would not recommend using SVG to mimic Confluence content elements, but it is a powerful technique that can unlock extra capability.
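The banner approach can be sketched as a pair of small helpers. This is a minimal illustration rather than the app's actual code: `buildBannerSvg` and `toDataUri` are hypothetical names, and the two hex values are assumed to be the red (R400) and green (G400) entries of the Atlassian design color palette.

```javascript
// Hypothetical helpers sketching the SVG banner workaround.
// Hex values are assumed palette colors (red R400, green G400).
const BANNER_RED = "#DE350B";
const BANNER_GREEN = "#00875A";

function escapeXml(text) {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

function buildBannerSvg(issueCount, width = 600, height = 40) {
  const fill = issueCount > 0 ? BANNER_RED : BANNER_GREEN;
  const label =
    issueCount > 0
      ? `${issueCount} best practice issue(s) found`
      : "No best practice issues found";
  // Explicit font-size and stroke-width avoid SVG defaults that look out of
  // place next to Confluence content.
  return (
    `<svg xmlns="http://www.w3.org/2000/svg" width="${width}" height="${height}">` +
    `<rect width="100%" height="100%" rx="3" fill="${fill}"/>` +
    `<text x="12" y="${height / 2}" dominant-baseline="middle" fill="#FFFFFF" ` +
    `font-family="sans-serif" font-size="14px" stroke-width="0px">${escapeXml(label)}</text>` +
    `</svg>`
  );
}

// encodeURIComponent turns each '#' into '%23'. Left unencoded, the first '#'
// would be read as the start of a URL fragment and the SVG would be cut off.
function toDataUri(svg) {
  return `data:image/svg+xml;utf8,${encodeURIComponent(svg)}`;
}
```

The value of `toDataUri(buildBannerSvg(issueCount))` is what would be passed as the Image component's src.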

The DACI Helper app code has been open-sourced at atlassian/forge-daci-helper. You can follow the instructions at https://developer.atlassian.com/platform/forge/example-apps/ to install it in your Confluence tenant, but you will need to be in the Forge beta program first.

Promoting an app with an app

With the DACI Helper app built, I then felt I needed to build awareness of it. Ideally, I would have modified the DACI template so that the app's macro was inserted into every new DACI page. However, the app had not yet proven itself useful, so that seemed a little premature. More importantly, the DACI template is a feature of the DACI Confluence app, which is installed in tenants where the DACI Helper app is not, so Confluence would display an error indicating that the macro is unavailable. That said, it is technically trivial to insert a Forge app into a Confluence template, and doing so is a great way to distribute apps within a tenant that relate strongly to certain kinds of content.

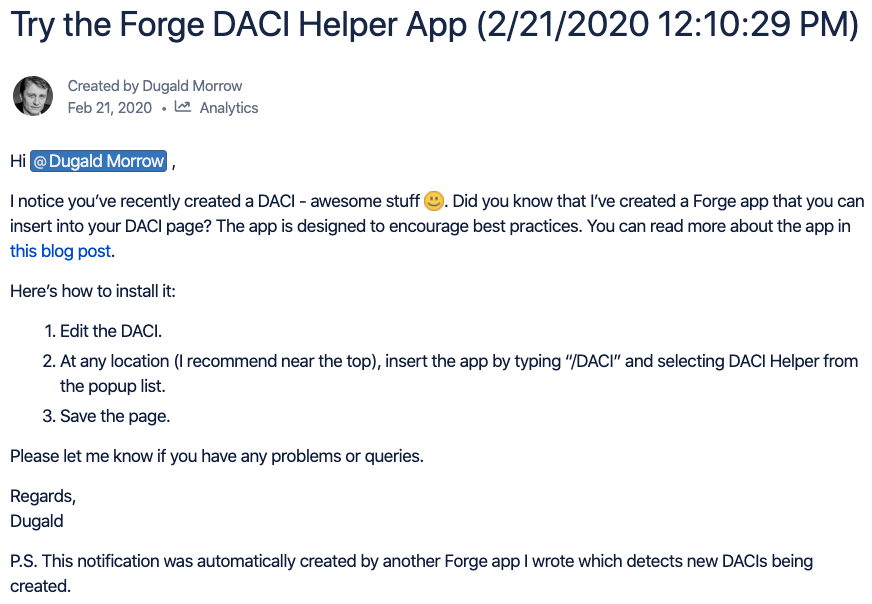

To promote the DACI Helper app, I built another Forge app whose purpose was to create awareness of it.

This new app, DACI Helper Promoter, listens to page publish events to detect the creation of DACI pages. Within Atlassian, we use the convention of prefixing all our decision page titles with "DACI:". Because the page title is included in the event payload, this simple prefix check is inexpensive and avoids unnecessary load on APIs.
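The prefix filter can be sketched as follows. The payload field names `event.content.title` and `event.content.id` are assumptions about the Confluence page event shape, and `createPagePublishedHandler` and `handleDaciPage` are hypothetical names; in a Forge app the returned function would be the exported product trigger handler.

```javascript
// Cheap title filter: decide from the event payload alone, before any API call.
const DACI_PREFIX = "DACI:";

function isDaciTitle(title) {
  return typeof title === "string" && title.trim().startsWith(DACI_PREFIX);
}

// Returns a trigger handler; handleDaciPage is a hypothetical callback that
// does the expensive work (fetching the page, creating the child page, etc.).
function createPagePublishedHandler(handleDaciPage) {
  return async (event) => {
    // Assumed payload shape: event.content.{id, title}.
    const content = event && event.content;
    if (!content || !isDaciTitle(content.title)) {
      return; // Not a DACI page: no REST calls are made.
    }
    await handleDaciPage(content.id);
  };
}
```

Filtering on the payload means non-DACI pages, which are the vast majority of publish events, cost nothing beyond the function invocation itself.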

When the app detects that a DACI page has been published, it creates a child page suggesting that the DACI driver insert the DACI Helper app into their DACI page. The page introduces the DACI Helper app and explains that it was automatically created by the DACI Helper Promoter app. It also mentions the DACI driver so they receive an email notification, drawing their attention to the page.

Of course, I had to be careful not to notify any given person more than once, because this would be very annoying. For this reason, the DACI Helper Promoter app had to keep a log of everyone who had been informed. Forge doesn't yet have its own storage mechanism, so the app stores the log using Confluence entity properties. I was also careful with the rollout, initially enabling the app only for the Confluence spaces my team was active in. With growing confidence, I gradually enabled it for more spaces.
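A minimal sketch of the "inform each driver at most once" log, assuming the log is a JSON array of account IDs held as a single entity property value. Loading and saving that property is omitted, and `shouldInform`, `recordInformed` and the account IDs are hypothetical.

```javascript
// The log is assumed to be a JSON array of account IDs stored as one entity
// property value; reading and writing that property is left out.
function shouldInform(log, accountId) {
  return !log.includes(accountId);
}

function recordInformed(log, accountId) {
  // Return a new array so the caller can persist it as the property value.
  return shouldInform(log, accountId) ? [...log, accountId] : log;
}

// Usage: three DACI pages published, two of them by the same driver.
let informedLog = [];
let notificationsSent = 0;
for (const driver of ["acc-1", "acc-2", "acc-1"]) {
  if (shouldInform(informedLog, driver)) {
    notificationsSent += 1; // createChildPage(driver) would go here (hypothetical)
    informedLog = recordInformed(informedLog, driver);
  }
}
```

This check-then-write is not atomic, so two page events processed concurrently for the same driver could both pass the check; the sketch ignores that race.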

I was encouraged to see various DACI drivers liking the auto-generated pages, and to observe the DACI Helper app's macro being inserted into a number of DACI pages. The rollout also produced some valuable feedback, such as the need to support inserting the app into draft pages. The fix involved passing the query parameter status=any when fetching the page content.
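The draft-page fix amounts to one extra query parameter. The sketch below only builds the request path for the Confluence Cloud REST API v1 "get content by ID" endpoint; `contentPath` is a hypothetical helper, and the `expand=body.storage` parameter is an assumption about what the app needs. With current versions of `@forge/api`, the path would be sent via something like `api.asApp().requestConfluence(route\`...\`)`.

```javascript
// Build the REST path for fetching a page whatever its status (including
// drafts). Without status=any, draft content is not returned.
function contentPath(contentId) {
  const params = new URLSearchParams({ status: "any", expand: "body.storage" });
  return `/wiki/rest/api/content/${encodeURIComponent(contentId)}?${params}`;
}
```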

Although the feedback was overwhelmingly positive, I subsequently thought of an alternative approach to pursue.

Decision making statistics app

At this point I had built code that could:

- Parse DACI pages and generate various insights into DACIs.

- Detect all DACI page publish events.

- Store and retrieve data using Confluence entity properties.

I figured it would not take much effort to refactor the code to build an app that collects statistics about DACI pages. These statistics would hopefully provide some insights into decision-making behaviors and possibly lead to improvements in the guidelines.

To avoid complicating the original open-source DACI helper app, I created a new Forge app and copied over the DACI page parsing logic. I called this new app DACI stats.

Like the DACI Helper Promoter app, the DACI stats app has a Confluence trigger that listens for page publish events. Forge supports subscribing to a growing list of event types from Atlassian products, and Forge's reliable, function-based runtime provides an ideal mechanism for processing events in near real time.

The initial product trigger logic was very similar to that of the DACI Helper Promoter app, and also began by filtering out pages whose titles do not start with "DACI:". The trigger then retrieves the page, parses it and records information about the page as an analytics record. Once again, this app stores data using Confluence entity properties. I could have used an external storage mechanism, but I wanted to keep the data within Confluence rather than egressing it to an external database.

I used a Forge environment variable to identify the content ID of the page to store the properties against. Forge environment variables can be set on a per-environment basis, so this approach allowed me to test the app against a different page on my test tenant from the page I intended to use in the Confluence tenant we use at Atlassian for all of our work.

The analytics events contain information such as:

- timestamp

- days since the first draft was created

- content ID

- space URL

- space key

- length of the title

- status

- etc
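A record like this could be assembled as follows. The sketch assumes the page was fetched from the Confluence REST API v1 with history and space expanded (so `page.history.createdDate` and `page.space.key` exist); the record's field names and the space URL format are illustrative, not the app's actual schema.

```javascript
const DAY_MS = 24 * 60 * 60 * 1000;

// page: assumed REST v1 content object with history and space expanded.
// parsed: output of the page parser (hypothetical shape with a status field).
function buildAnalyticsRecord(page, parsed, baseUrl, now = new Date()) {
  const firstDraft = new Date(page.history.createdDate);
  return {
    timestamp: now.toISOString(),
    daysSinceFirstDraft: Math.floor((now - firstDraft) / DAY_MS),
    contentId: page.id,
    spaceKey: page.space.key,
    spaceUrl: `${baseUrl}/wiki/spaces/${page.space.key}`, // assumed URL format
    titleLength: page.title.length,
    status: parsed.status,
  };
}
```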

Each Confluence entity property has a size limit of 32KB, but there is no limit to the number of entity properties that can be stored against a piece of content. For these reasons, I chose to store each DACI analytics event in a separate entity property.
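Storing one event per property means each write needs a unique key and a value that stays under the size limit. In this sketch the key scheme (`daci-event-<contentId>-<epoch ms>`) is hypothetical, and the check uses the 32KB figure quoted in the paragraph above.

```javascript
const MAX_PROPERTY_BYTES = 32 * 1024;

// Turn an analytics record into a { key, value } pair for one entity property.
function toEntityProperty(record) {
  const bytes = Buffer.byteLength(JSON.stringify(record), "utf8");
  if (bytes > MAX_PROPERTY_BYTES) {
    throw new Error(`Analytics record is ${bytes} bytes; limit is ${MAX_PROPERTY_BYTES}`);
  }
  // Content ID plus event time keeps keys unique per event (hypothetical scheme).
  const key = `daci-event-${record.contentId}-${Date.parse(record.timestamp)}`;
  return { key, value: record };
}
```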

With this code implemented, I deployed the app to the Forge production environment and installed it in the Confluence tenant we use at Atlassian for all of our work, where it started logging analytics events to content properties. However, the app didn't yet have any features that provided insights or summary-level information, so I needed to develop a way to summarise data spread over all the content properties. After only a few days, the app had stored over 200 DACI analytics events, so it was clear the next challenge would be querying the data in order to summarise it. This challenge arises from Forge's 10 second invocation timeout combined with the fact that the APIs for retrieving Confluence entity properties are quite limited: a single entity property can be retrieved by its key, and multiple entity properties can be retrieved by specifying an offset and limit. With an ever-growing number of entity properties storing analytics events, the app would have to make multiple queries to retrieve and process all of them, and it was only a matter of time before this processing exceeded Forge's 10 second invocation timeout.

I considered modifying the app to compute the summary data incrementally as each analytics event is recorded. However, I didn't have a clear idea of which information would need to be summarised, and I figured there would be an ongoing need to process the raw analytics events to provide new insights. In addition, some statistics, such as the median, cannot be computed exactly in an incremental manner without retaining every value.
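To make the point about the median concrete: the mean of [2, 9, 4] can be updated from a running sum and count when a new value of 1 arrives, but knowing only that the old median was 4 is not enough to find the new median of 3, because the middle values 2 and 4 are needed. The sketch contrasts the two (function names are illustrative).

```javascript
// Exact median: needs every value, sorted. Nothing smaller than the full
// value set is enough to maintain it as new values arrive.
function median(values) {
  if (values.length === 0) return undefined;
  const sorted = [...values].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 1 ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2;
}

// Mean: can be maintained incrementally from a running sum and count.
function updateMean(state, value) {
  const count = state.count + 1;
  const sum = state.sum + value;
  return { count, sum, mean: sum / count };
}

// Usage:
// median([2, 9, 4]) is 4; median([2, 9, 4, 1]) is (2 + 4) / 2 = 3.
```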

To solve this, I added a web trigger to the Forge app to form the basis of a long-running task.

When the web trigger is invoked with a GET request, it initializes some context for the long-running task to execute in. This context stores the information needed to compute the summary data. It also records how far the task has progressed, so that each invocation retrieves only a small batch of entity properties and no single invocation risks exceeding Forge's 10 second timeout. At the end of each web trigger invocation, the app sends a POST request to the same web trigger, with the long-running task context in the request body. Once all the analytics events have been retrieved, the final web trigger invocation performs the summary computations and stores the summary data in a separate Confluence entity property.
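The chaining can be sketched as a pure "fold one batch into the context" function plus a handler outline. `BATCH_SIZE`, the context shape and the `daysToDecide` field are hypothetical; the handler is given only as comments because the real version depends on Forge's web trigger request/response objects and an HTTP call back to the trigger URL.

```javascript
const BATCH_SIZE = 25; // assumption: small enough to finish well within 10 seconds

// Context carried between web trigger invocations in the POST body.
function initialContext() {
  return { offset: 0, durations: [], done: false };
}

// Fold one batch of analytics events (fetched with offset/limit) into the context.
function applyBatch(context, batch) {
  const durations = context.durations.concat(
    batch.map((e) => e.daysToDecide).filter((d) => typeof d === "number")
  );
  return {
    offset: context.offset + batch.length,
    durations,
    done: batch.length < BATCH_SIZE, // a short batch means nothing is left to fetch
  };
}

// Outline of one web trigger invocation (Forge specifics omitted):
//   GET  -> context = initialContext()
//   POST -> context = JSON.parse(request.body)
//   batch = await fetchProperties(context.offset, BATCH_SIZE)   // hypothetical
//   next  = applyBatch(context, batch)
//   if (!next.done) POST JSON.stringify(next) to the web trigger URL
//   else compute the summary from next.durations and store it
```

Keeping the per-batch logic pure means the whole chain can be simulated locally by calling `applyBatch` in a loop over an in-memory array.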

Similar to identifying the content ID to store the entity properties against, I stored the web trigger URL in a Forge environment variable so the app could invoke the correct web trigger for the environment it was running in.
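Reading both settings could look like the sketch below. Forge environment variables are exposed to the function through `process.env`, and their values are set per environment with the Forge CLI's variables command; the variable names here are hypothetical.

```javascript
// Fail fast if a per-environment setting is missing, rather than writing
// analytics to the wrong page or POSTing to the wrong web trigger.
function requireEnv(name, env = process.env) {
  const value = env[name];
  if (!value) {
    throw new Error(`Environment variable ${name} is not set for this environment`);
  }
  return value;
}

function loadConfig(env = process.env) {
  return {
    statsContentId: requireEnv("DACI_STATS_CONTENT_ID", env), // hypothetical name
    webTriggerUrl: requireEnv("DACI_STATS_WEBTRIGGER_URL", env), // hypothetical name
  };
}
```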

When I was developing the long-running task, I was paranoid about creating an infinite loop of web trigger invocations, so I implemented a simple circuit breaker based on a pseudo-random number: processing stops if the number falls below a threshold, which I set to give roughly a 2% probability of stopping on each invocation. This was low enough that I could loop through all of my test data reasonably reliably, although sometimes the circuit breaker would trip and I'd have to start the task again. Forge has some abuse prevention logic, but this circuit breaker was trivial to add and provided peace of mind.
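The breaker, and a quick calculation of its cost, can be sketched as follows (names are illustrative). With a 2% trip probability per invocation, a run of n invocations completes with probability 0.98^n: about 82% for 10 invocations but only about 36% for 50. A runaway loop, meanwhile, lasts about 1/0.02 = 50 invocations on average, so the threshold suits small data sets and would need revisiting as the data grows.

```javascript
const TRIP_PROBABILITY = 0.02;

// Stop processing when the pseudo-random number falls below the threshold.
function shouldTrip(random = Math.random, p = TRIP_PROBABILITY) {
  return random() < p;
}

// Probability that a task needing n invocations completes without tripping.
function completionProbability(n, p = TRIP_PROBABILITY) {
  return Math.pow(1 - p, n);
}
```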

After deploying my app to production once more, I discovered that the long-running task stopped partway through. It turned out that the app was accumulating too much context data to POST to the next iteration of the task. My development environment only had a small amount of data, so I never hit that problem there. I fixed it by culling unnecessary data, but I am aware that this issue will likely arise again as the app collects more statistics.
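Culling can be sketched as keeping only the fields the summary needs, plus a size check before each POST so the limit is hit explicitly rather than silently. `SUMMARY_FIELDS` and `MAX_CONTEXT_BYTES` are hypothetical; the byte budget is an assumed value, not a documented Forge limit.

```javascript
const MAX_CONTEXT_BYTES = 100 * 1024; // assumed budget, not a documented limit
const SUMMARY_FIELDS = ["status", "daysSinceFirstDraft", "optionCount"]; // hypothetical

// Keep only the fields the summary computation actually uses.
function cullEvent(event) {
  const culled = {};
  for (const field of SUMMARY_FIELDS) {
    if (event[field] !== undefined) culled[field] = event[field];
  }
  return culled;
}

// Serialize the context for the next POST, refusing to exceed the budget.
function serializeContext(context) {
  const body = JSON.stringify(context);
  const bytes = Buffer.byteLength(body, "utf8");
  if (bytes > MAX_CONTEXT_BYTES) {
    throw new Error(`Context is ${bytes} bytes, over the ${MAX_CONTEXT_BYTES} byte budget`);
  }
  return body;
}
```

Culling only delays the problem as event counts grow; carrying running aggregates (or writing partial results to an entity property) instead of raw events would keep the POST body bounded, at the cost of the flexibility discussed earlier.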

A note on trust and environments: to prevent unnecessary access by app developers to personal and user-generated data, Forge does not allow app developers to see logs from the production environment or to tunnel into it. This will soon be bolstered by the introduction of a permissions-based scheme that will require apps to declare their intention to use certain capabilities, such as egressing data. These features are important for building customer trust in Forge apps, but they can be problematic when problems occur in the production environment but not in the development environment. To minimize the impact, ensure the data you test against in your development environment is realistic and covers as many cases as possible. Also ensure your development data is similar in volume wherever you believe your app may be pushing processing limits.

The final step involved adding a macro to the app so that the summary data could be displayed. I developed the macro in my test tenant with the aid of tunneling. Once happy with the macro, I deployed the app to the production environment in seconds with forge deploy -e production. However, the macro had a bug that caused it to crash.

I had to disable the functionality I had introduced until the macro worked again, which allowed me to narrow down the problematic code. To avoid this kind of problem, make sure your development environment has sufficiently realistic data that covers a variety of use cases.

Decision making insights

The DACI statistics app has only been running for a short time, so there is not yet enough data to provide accurate insights. However, this blog is more concerned with demonstrating how Forge apps can surface insights into an organization’s behavior, so the following should be interpreted as preliminary insights.

Completion data

The app calculates the time taken to make a decision by comparing the time the DACI transitions to the DECIDED state with the time the app first detects the DACI being drafted. Because this requires monitoring the entire lifecycle of a decision, the app has not yet collected enough data to be informative. So far, however, the median time to make a decision is about 6 days.
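Deriving these durations from the stored analytics events might look like the sketch below. It assumes each event carries `contentId`, an ISO `timestamp` and `status`, and treats the earliest event seen for a page as the start of drafting. DACIs that were already in progress when the app was installed will therefore appear faster than they really were, and undecided DACIs contribute nothing, which is one reason the data needs time to mature.

```javascript
const DAY_MS = 24 * 60 * 60 * 1000;

// Days from first sighting to first DECIDED sighting, per DACI.
function decisionDurations(events) {
  const ordered = [...events].sort((a, b) => Date.parse(a.timestamp) - Date.parse(b.timestamp));
  const firstSeen = new Map();
  const decidedAt = new Map();
  for (const e of ordered) {
    const t = Date.parse(e.timestamp);
    if (!firstSeen.has(e.contentId)) firstSeen.set(e.contentId, t);
    if (e.status === "DECIDED" && !decidedAt.has(e.contentId)) decidedAt.set(e.contentId, t);
  }
  const durations = [];
  for (const [id, end] of decidedAt) {
    durations.push((end - firstSeen.get(id)) / DAY_MS);
  }
  return durations;
}
```

The median of the returned array gives the "median time to make a decision" figure.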

Overdue decisions

An important part of the DACI decision making framework involves defining a date by which the decision is due. The statistics collected thus far indicate that about 4% of our decisions are overdue.
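An overdue share like this could be computed over the latest known state of each DACI, as sketched below. The input shape is hypothetical, DACIs without a due date count in the denominator but can never be overdue, and treating OBSOLETE as closed alongside DECIDED is an assumption.

```javascript
const CLOSED_STATUSES = new Set(["DECIDED", "OBSOLETE"]); // OBSOLETE as closed is an assumption

// Share of all DACIs that are past their due date and not yet closed.
function overdueFraction(dacis, now = new Date()) {
  if (dacis.length === 0) return 0;
  const overdue = dacis.filter(
    (d) => d.dueDate && !CLOSED_STATUSES.has(d.status) && Date.parse(d.dueDate) < now.getTime()
  );
  return overdue.length / dacis.length;
}
```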

Incorrect status

The DACI Confluence app suggests that the valid DACI statuses are NOT STARTED, IN PROGRESS and DECIDED. The DACI Helper app introduces another status, OBSOLETE. However, 31% of DACIs have some other status. One of the more interesting alternative statuses was "READY FOR INPUT ?".
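A percentage like this comes from classifying each status against the recognized set. In the sketch, the normalization (trimming, collapsing whitespace, upper-casing) is an assumption about how lenient the check should be; a stricter check would report a higher share.

```javascript
const RECOGNIZED_STATUSES = new Set(["NOT STARTED", "IN PROGRESS", "DECIDED", "OBSOLETE"]);

function normalizeStatus(status) {
  return String(status || "").trim().replace(/\s+/g, " ").toUpperCase();
}

// Share of DACIs whose status is outside the recognized set.
function unrecognizedStatusShare(statuses) {
  if (statuses.length === 0) return 0;
  const other = statuses.filter((s) => !RECOGNIZED_STATUSES.has(normalizeStatus(s)));
  return other.length / statuses.length;
}
```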

Decision options

The median number of options for our decisions is 3, but up to 6 options have been observed in some decisions.

Pros and cons

Most of our decisions lump the pros and cons of each option together. However, a small number of DACI drivers have gone to the effort of splitting pros and cons out into distinct considerations, so that each option is assessed against each consideration.

Guideline breaches

Confluence does not enforce the DACI process, so it is not surprising that breaches of the DACI guidelines exist. On average, our DACIs breach one third of the guidelines that the app checks.
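An average like this can be computed per DACI and then averaged, as sketched below, which weights every decision equally regardless of how many guidelines were checked on it; pooling all breaches over all checks would instead weight larger DACIs more. The `{ checked, breached }` shape is hypothetical.

```javascript
// Mean of per-DACI breach fractions; DACIs with no checks are skipped.
function meanBreachFraction(results) {
  const scored = results.filter((r) => r.checked > 0);
  if (scored.length === 0) return 0;
  const total = scored.reduce((sum, r) => sum + r.breached / r.checked, 0);
  return total / scored.length;
}
```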

Summary

The statistics suggest that our DACI process is rather loose, but effective at making decisions in a timely manner. There is an opportunity to improve adherence to the process and possibly to introduce some kind of change to reduce the number of overdue decisions.

Ideas for similar types of Forge apps

OKR tracking and analysis

Does your organization capture OKRs in either Jira or Confluence? If so, you could create an app that captures and analyses OKR data. If you use Confluence to capture OKRs, consider creating a Confluence OKR template and inserting the app into it so that it is automatically added to each new OKR page.

Team health tracking

Use the Team Health Monitor plays to record the health of your teams from time to time. Apps such as the Leadership Team Health Monitor help your teams capture results in a consistent format, which in turn allows a Forge app to track trends across teams.

Forge experience

Overall, developing in Forge was productive and a lot of fun. Here's a short summary of the best features of Forge and those areas that I'm sure will improve in the near future.

Best features

- Forge is completely free for app developers. Forge hosts and runs developers’ apps, but does not pass on any of the costs.

- Forge apps can be created, deployed and installed in seconds using the Forge CLI.

- Dealing with the Confluence Cloud REST API was dramatically simplified by Forge taking care of the authentication. This can be a real challenge when using Connect, especially if you are crafting your JWTs without a library such as ACE. Forge provides the same convenience for the Jira Cloud REST API.

- Forge's tunneling feature provides a nice short dev loop in which you can debug using Chrome DevTools.

- Processing events is a snap for Forge apps. It is simple to wire up an event handler and have it running in seconds without any concerns about scaling to handle peak loads.

Room for improvement

- The 10 second invocation timeout imposed by the Forge runtime poses challenges that may be difficult to work around.

- UI component composability is limited at the moment; this would be alleviated by the introduction of higher-level components such as banners and cards.